The history of artificial intelligence (AI) is rather short, yet the field has progressed remarkably in about sixty years. We have gone from the first computers, which could only perform calculations, to machines such as Deep Blue, Watson and AlphaGo, which have competed against and beaten top human players at chess, at the quiz show “Jeopardy!” and at Go. The field has experienced highs and lows, but these have not prevented AI from progressing overall. Advances in algorithms, increased computing power and data science have all helped advance AI in recent years.

Web giants such as Google, Facebook, IBM, Microsoft and Amazon are all investing in this field and building teams of AI experts to carry out R&D. Facebook, for example, has seen the potential of AI and recently opened an AI research center, mainly to work on virtual reality around its Oculus VR headset and to develop voice and image recognition tools.

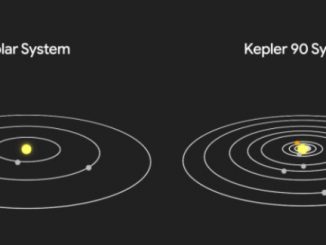

Lately we hear a lot about AI in connection with machine learning (one of the branches of AI) and deep learning (a machine learning technique that uses artificial neural networks). Deep learning moves toward “strong” AI because it is based on learning: the system takes in new data and adjusts its own behavior accordingly. The technique has proven itself with AlphaGo, a computer program based on deep learning and developed by Google DeepMind, which has beaten one of the best Go players in the world. Programming a Go player is much harder than programming a chess player because Go offers far more possible combinations than chess. IBM’s supercomputer Deep Blue, which defeated the world chess champion, relied on brute-force search, in other words on testing an enormous number of possible move sequences. Exhaustive search works well when a lot of computing power is available, as was the case with a supercomputer, but it has one drawback: its cost grows with the number of solutions to test. When that number is very large, as in Go, exhaustive search becomes impractical because it requires too much computing power and too much execution time. Hence the interest in improving existing algorithms and creating new ones, in order to do better with less computing power. For the time being, deep learning is a good solution to some problems and a step toward strong AI.
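To make the cost of exhaustive search concrete, here is a minimal sketch in Python. It is not the code of Deep Blue or AlphaGo. It uses tic-tac-toe as a stand-in game, because that game is small enough to search completely. The function names (`winner`, `minimax`, `tree_size_log10`) are my own, and the branching factors and game lengths used for chess and Go are rough, commonly cited estimates that I am assuming for illustration.

```python
import math

# The eight winning lines of a 3x3 board, stored as index triples.
WIN_LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
             (0, 3, 6), (1, 4, 7), (2, 5, 8),
             (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in WIN_LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def minimax(board, player, counter):
    """Exhaustively search every continuation from `board`.

    Returns +1 if X can force a win, -1 if O can, 0 for a draw.
    counter[0] is incremented once per position visited.
    """
    counter[0] += 1
    w = winner(board)
    if w == "X":
        return 1
    if w == "O":
        return -1
    if " " not in board:
        return 0  # board full, nobody won
    scores = []
    for i in range(9):
        if board[i] == " ":
            board[i] = player  # try the move
            scores.append(minimax(board, "O" if player == "X" else "X", counter))
            board[i] = " "     # undo it
    return max(scores) if player == "X" else min(scores)


def tree_size_log10(branching_factor, depth):
    """Rough size of a game tree, branching_factor ** depth, as a power of ten."""
    return depth * math.log10(branching_factor)


if __name__ == "__main__":
    visited = [0]
    result = minimax([" "] * 9, "X", visited)
    print("tic-tac-toe value:", result, "positions visited:", visited[0])
    # Assumed rough figures: about 35 moves per turn over 80 turns for chess,
    # about 250 moves per turn over 150 turns for Go.
    print("chess: about 10^%d positions" % round(tree_size_log10(35, 80)))
    print("Go:    about 10^%d positions" % round(tree_size_log10(250, 150)))
```

Even for tic-tac-toe, the full search visits hundreds of thousands of positions. Under the assumed figures, the same approach would face a tree hundreds of orders of magnitude larger for chess, and larger still for Go, which is why searching everything stops being an option.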

However, the main objective of strong AI is to create a machine that is capable of thinking and having feelings, one that works the way the human cognitive system does. I think we are still a long way off, even though AI has made a lot of progress. Perhaps we will need to return to the basics of AI in order to reach a true strong AI, or perhaps a true strong AI is simply not achievable. Weak AI differs from strong AI in that the computer program only simulates intelligence. Watson, for example, is close to weak AI because it simulates intelligence: it is not a program that learns, but one based on text search algorithms. It finds the text but does not understand it. Watson is a computer program developed by IBM that uses the Hadoop framework, which lets it browse a very large amount of locally available data (about 200 million pages) very quickly (in less than 3 seconds). Watson faced two human opponents on the game show “Jeopardy!” and won by answering questions formulated in natural language. Because Watson can process natural language and quickly find an answer, it has been used in the medical sector to assist doctors. For example, doctors at the University of Tokyo used Watson to help diagnose a rare case of leukemia, and the program found the true cause in a few minutes. The doctors estimated that it would have taken them two weeks to find it themselves.

Whether consciousness can be created in a machine is still uncertain. Machines work in binary and calculate only with 1s and 0s, whereas the human brain is much more complex and works with neurons. Today, we are unable to build an artificial brain. Some experts have raised objections: that consciousness is specific to living organisms, that machines lack the kind of language needed for human-like intelligence, or that a machine can only calculate and not think. However, I think it is too early to hold AI to these limits. It is not really clear what consciousness is, and the history of human technology has often shown that problems considered impossible to solve were solved later.

Today, some software built on scalable algorithms is very sophisticated, because it can analyze and process very large amounts of data (Big Data). In the medical sector, for example, software can study patient records and reach a diagnosis that is sometimes better than the doctor’s. There is no consciousness and no autonomous intelligence in this software. It is more a matter of good programming. Some specialists say that when the computational capacity of a machine exceeds the level of complexity of the human brain, a consciousness will emerge in the machine. I have always wondered what this statement is based on. It seems to me to be a hypothesis rather than an established fact, and for the moment it remains a matter of theory. Personally, if it were possible to put a consciousness in a machine, I would find it dangerous to connect that machine to the network. I think such a machine would have to be kept under control: give it access only to a closed, human-controlled network, and build a simple mechanism capable of quickly disabling this strong AI if necessary. What I fear is that a strong AI placed on the global network, that is, an AI capable of thinking and acting autonomously, would become uncontrollable and could cause serious damage in the world.

AI is able to learn quickly, much faster than a human. For example, experiments have shown that a robot helicopter using an evolutionary algorithm can learn in half an hour to perform perfect loops, something a human pilot is not able to do. When we launch these machine learning algorithms, we know that the machines learn, but we do not really know in detail what is going on. Recently, as part of the Google Brain AI research program, two computers were able to create their own algorithms to communicate with each other in a language unknown to humans. The ability of AI to learn very quickly and surpass humans, together with our incomplete understanding of how it works, suggests that a complete and autonomous AI would be uncontrollable once launched.

Many people working in or around AI have already issued warnings about a complete and autonomous AI. Elon Musk, Bill Gates and Stephen Hawking have all warned about the potential risks of a strong AI. For them, a strong AI that was created and deployed could mean the end of the human species. Many people also want to ban autonomous weapons, that is, autonomous robots capable of killing humans.

I often hear about Asimov’s three laws of robotics, which are meant to protect humans from machines, but in reality none of these laws is implemented in robots.

With AI progressing this way, the ethical question is unavoidable. I will illustrate my point with a concrete example: intelligent cars. Connected, intelligent and autonomous cars will surely appear in the near future. However, as in any traffic, some accidents cannot be avoided. The question I would ask is how the intelligent car will respond to an unavoidable accident: will it sacrifice its passengers or protect the pedestrians?

Hence the question of ethics: how will the car be programmed to deal with this type of situation?

To conclude, I consider a completely autonomous AI without any control to be dangerous for humans. I see AI as a system that helps us solve problems and helps people.
